The Dawn of the Digital Age: The Invention of the First CPU

In the landscape of computing, the Central Processing Unit (CPU) is the cornerstone that has propelled the digital revolution. The journey to the first CPU began with foundational discoveries and inventions that set the stage for this groundbreaking technology.

Silicon’s Role and the Birth of Transistors

It all started in 1823, when Baron Jöns Jacob Berzelius discovered silicon, the element at the heart of modern processors. However, it was not until more than a century later, in 1947, that John Bardeen, Walter Brattain, and William Shockley invented the first transistor at Bell Laboratories. The invention was so significant that the trio was awarded the Nobel Prize in Physics in 1956.

Intel 4004: The World’s First Microprocessor

These advancements culminated in the world’s first commercially available microprocessor, the Intel 4004, introduced on November 15, 1971. Designed by Federico Faggin, Marcian Edward “Ted” Hoff, Stanley Mazor, and Busicom engineer Masatoshi Shima, the 4004 was a 4-bit processor capable of roughly 60,000 operations per second. It marked the beginning of a new era in computing: by placing an entire CPU on a single chip, it reshaped not only processor design but the integrated circuit industry as a whole.

The Evolution of CPUs

Since the release of the Intel 4004, CPUs have undergone tremendous evolution, becoming exponentially more powerful and efficient. From the 8-bit processors of the 1970s to the multi-core powerhouses of today, CPUs continue to be the driving force behind computing innovation.

The invention of the first CPU was a watershed moment that transformed the technological landscape. It laid the groundwork for the modern computer and continues to influence the evolution of technology. As we look back on this history, we can appreciate the ingenuity and foresight of the pioneers who made the digital age possible.

This has been a brief overview of the creation of the first CPU. For a more detailed exploration, numerous resources delve into the intricate history of computer processors.

A Deep Dive into the Evolution of the CPU

The Central Processing Unit (CPU), often referred to as the brain of the computer, has undergone a remarkable evolution since its inception. The journey of the CPU is a testament to human ingenuity and the relentless pursuit of technological advancement.

The Precursors to the CPU

Before the CPU came into existence, several key developments laid the groundwork for its creation. In 1823, Baron Jöns Jacob Berzelius discovered silicon, which would become a fundamental component of modern processors. Nikola Tesla’s 1903 patent describing electrical logic circuits, often characterized as early “gates” or “switches,” is also frequently cited as a precursor to the CPU.

The Transistor: A Revolutionary Invention

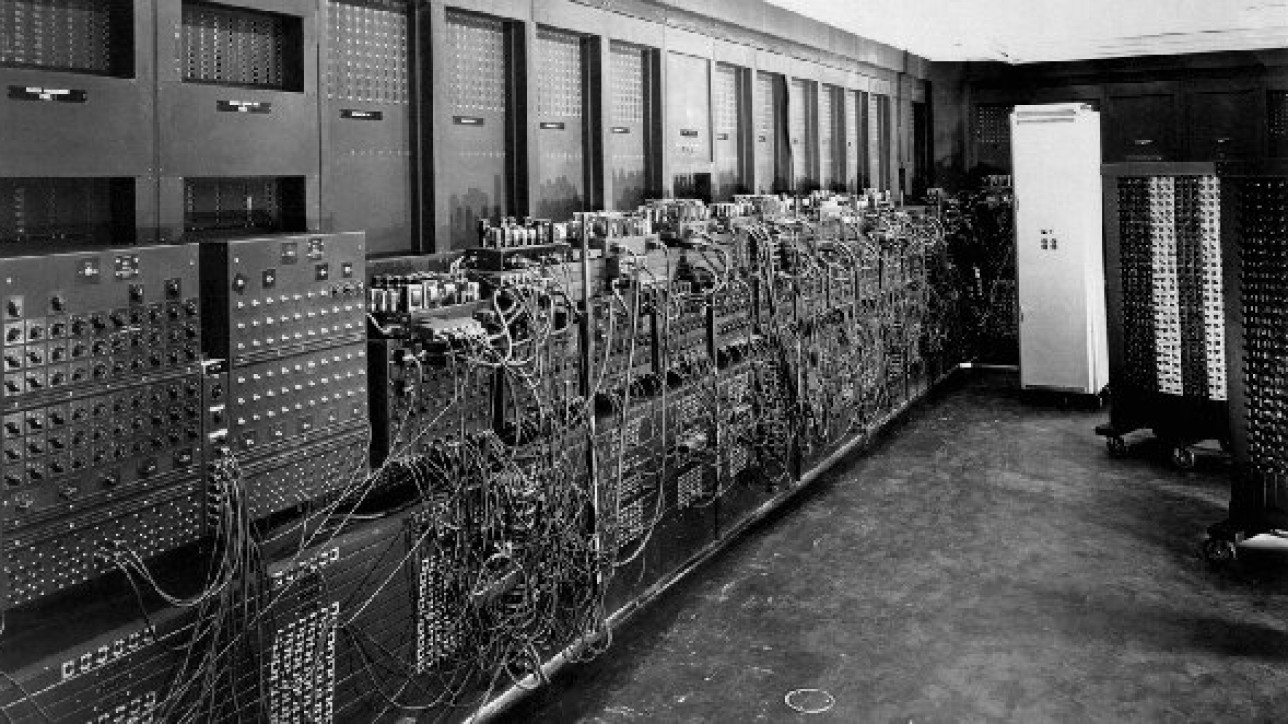

The invention of the transistor by John Bardeen, Walter Brattain, and William Shockley at Bell Laboratories in 1947 marked a pivotal moment in the history of computing. The innovation earned them the Nobel Prize in Physics in 1956, and transistors gradually replaced the bulky, power-hungry, and less reliable vacuum tubes used in earlier computing machines.

The Integrated Circuit: A Leap Forward

The integrated circuit (IC) was a significant leap forward, allowing for more complex and compact electronic devices. Geoffrey Dummer described the concept as early as 1952, although his own attempt to build one was unsuccessful. Jack Kilby of Texas Instruments demonstrated the first working IC in 1958, and Robert Noyce of Fairchild Semiconductor independently developed a practical silicon-based version in 1959. The IC would later become the foundation of CPU design.

The Rise of Competitors and Advancements

Following the release of the Intel 4004, competition in the CPU market began to heat up, with companies such as AMD, founded in 1969, joining the race. Processors like the Intel 8080, introduced in 1974, became an industry standard, and the MOS Technology 6502, released in 1975, powered popular computers such as the Apple II and the Commodore PET, as well as, in variant form, video game consoles such as the Atari 2600 and the Nintendo Entertainment System.

The Modern CPU

Today’s CPUs are marvels of engineering, featuring billions of transistors and the ability to perform billions of operations per second. They are the result of decades of innovation and competition, pushing the boundaries of what’s possible in computing.